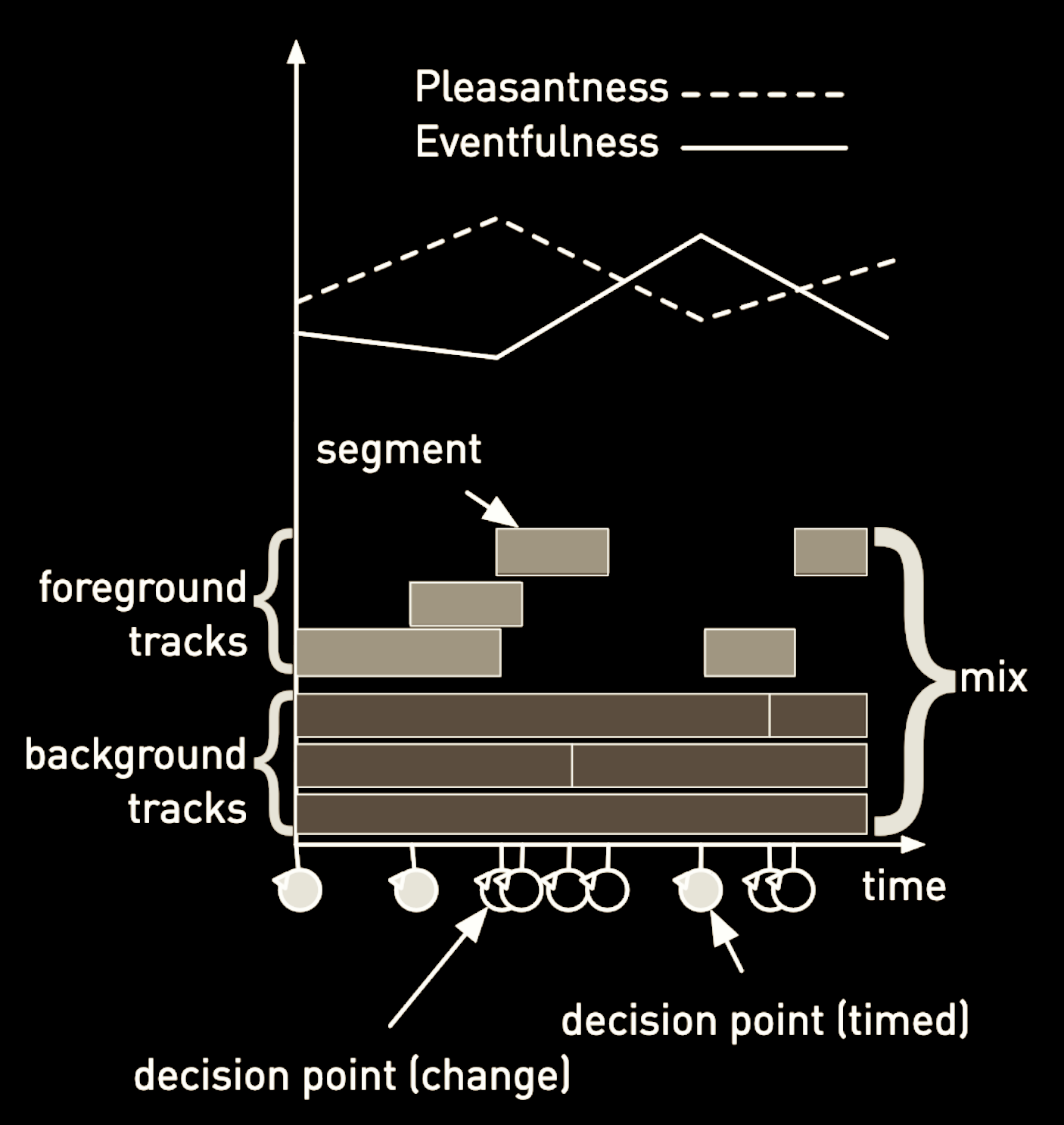

Audio Metaphor is a pipeline of computational tools for generating artificial soundscapes. The pipeline includes modules for audio file search, segmentation and classification, and mixing. The input for the pipeline is a sentence, a desired duration, and curves for pleasantness and eventfulness. Each module can be used independently, or the modules can be used together to generate a soundscape from a sentence.

The SLiCE algorithm generates a set of search permutations from a prompt. Modelled after how sound artists search for sounds, these search queries are then used to create mutually exclusive sets of audio file recommendations from a database. Searching continues until the returned results cover all word-features. Each word-feature is covered no more than once: when a query returns a non-empty result, all other queries containing any of its word-features are filtered out.
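The filtering rule described above can be sketched as follows. This is a minimal illustration, not the SLiCE implementation: it assumes that queries are contiguous word sub-sequences tried longest first, and `TOY_DB`, `toy_search`, `candidate_queries`, and `slice_search` are hypothetical names introduced only for this example.

```python
from typing import Callable, Dict, List, Tuple

Query = Tuple[str, ...]


def candidate_queries(words: List[str]) -> List[Query]:
    """Contiguous word sub-sequences, longest first (an assumed ordering)."""
    n = len(words)
    return [tuple(words[i:i + size])
            for size in range(n, 0, -1)
            for i in range(n - size + 1)]


def slice_search(words: List[str],
                 search: Callable[[Query], List[str]]) -> Dict[Query, List[str]]:
    """Run queries until every coverable word-feature is covered at most once."""
    covered = set()
    results: Dict[Query, List[str]] = {}
    for query in candidate_queries(words):
        # Filter out queries that reuse an already covered word-feature.
        if covered.intersection(query):
            continue
        hits = search(query)
        if hits:  # a non-empty result covers all words in the query
            results[query] = hits
            covered.update(query)
    return results


# Hypothetical tag database standing in for the real audio file database.
TOY_DB = {
    "rain_on_roof.wav": {"rain", "roof"},
    "city_rain.wav": {"rain", "city"},
    "dog_bark.wav": {"dog"},
}


def toy_search(query: Query) -> List[str]:
    """Return files whose tags contain every word in the query."""
    return sorted(name for name, tags in TOY_DB.items() if set(query) <= tags)


recommendations = slice_search(["dog", "rain", "city"], toy_search)
print(recommendations)
```

In this toy run, the query `("rain", "city")` succeeds first, so the single-word queries `("rain",)` and `("city",)` are never issued, and `("dog",)` covers the remaining word-feature.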

An audio recording can be divided into three general classes: background, foreground, and background with foreground sounds. Sound designers and soundscape composers manually segment audio files into building blocks for use in a composition. We use machine learning to classify segments in an audio file automatically. Based on a perceptual model, the classifier uses extracted features to classify each segment in the audio file as foreground, background, or background with foreground. Audio file classifications are saved in the database to be used as the backbone in composition by the conductor.
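As a rough illustration of how per-segment labels might become building blocks, the sketch below merges consecutive windows that share a label into timed segments. It assumes fixed-length analysis windows and takes the labels as given; the classifier itself is not shown, and `merge_windows` and the 0.5 second window length are hypothetical choices made for this example.

```python
from itertools import groupby
from typing import List, Tuple

# The three classes named in the text.
LABELS = ("background", "foreground", "background with foreground")


def merge_windows(labels: List[str],
                  window_seconds: float) -> List[Tuple[float, float, str]]:
    """Merge runs of identical window labels into (start, end, label) segments."""
    segments = []
    index = 0
    for label, run in groupby(labels):
        length = len(list(run))
        start = index * window_seconds
        end = (index + length) * window_seconds
        segments.append((start, end, label))
        index += length
    return segments


# Hypothetical classifier output for five consecutive windows.
window_labels = [
    "background",
    "background",
    "foreground",
    "background with foreground",
    "background with foreground",
]
segments = merge_windows(window_labels, window_seconds=0.5)
print(segments)
```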

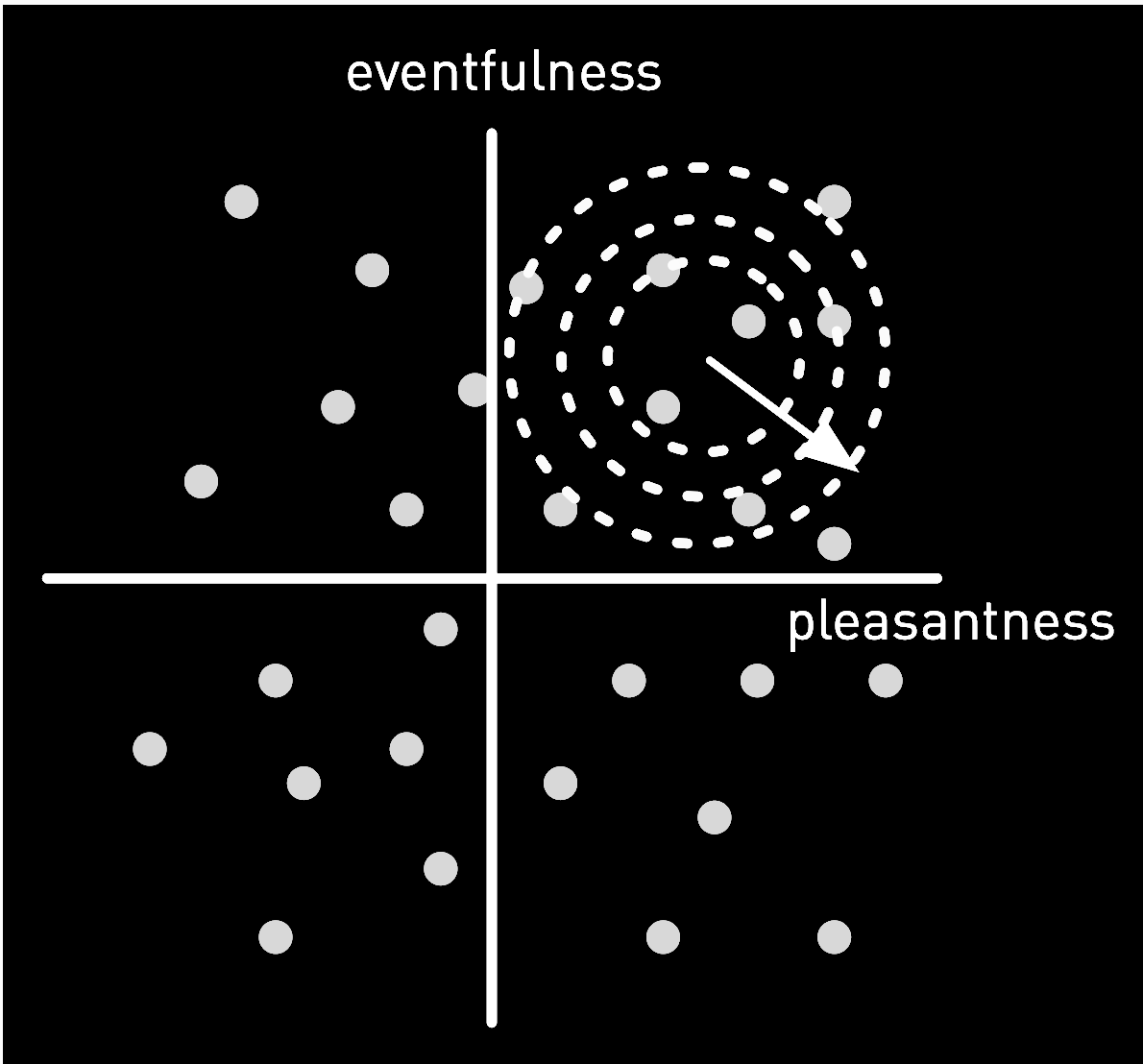

With the ability to interpolate a circumplex model, AuME retrieves audio segments evaluated on scales of valence and arousal. This model suggests that all emotions are distributed in a circular space. High levels of valence correspond to pleasant sounds, while low levels correspond to unpleasant sounds. Similarly, high levels of arousal correspond to exciting sounds, while low levels correspond to calming sounds. Sound designers evoke emotion in a listener by dynamically controlling valence and arousal levels throughout a composition. We quantify levels of valence and arousal using machine learning for emotion prediction. The emotion prediction models use a subset of extracted features to predict valence and arousal for each segment in an audio file.
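One way to picture retrieval on the valence and arousal plane is shown below. This is a sketch under stated assumptions, not AuME's method: curves are assumed to be sorted `(time, value)` control points joined by linear interpolation, segments are matched by Euclidean distance, and the segment names and values are invented for the example.

```python
from bisect import bisect_right
from math import hypot
from typing import Dict, List, Tuple

Curve = List[Tuple[float, float]]  # (time in seconds, value), sorted by time


def interpolate(curve: Curve, t: float) -> float:
    """Linearly interpolate a curve at time t, clamping outside its range."""
    times = [point[0] for point in curve]
    if t <= times[0]:
        return curve[0][1]
    if t >= times[-1]:
        return curve[-1][1]
    i = bisect_right(times, t)
    (t0, v0), (t1, v1) = curve[i - 1], curve[i]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def nearest_segment(segments: List[Dict], valence: float, arousal: float) -> Dict:
    """Pick the segment closest to the target point in the valence/arousal plane."""
    return min(segments,
               key=lambda s: hypot(s["valence"] - valence, s["arousal"] - arousal))


# Hypothetical curves and predicted segment values.
valence_curve = [(0.0, -1.0), (10.0, 1.0)]
arousal_curve = [(0.0, 0.0), (10.0, 0.5)]
toy_segments = [
    {"file": "calm_stream.wav", "valence": 0.6, "arousal": -0.5},
    {"file": "thunder.wav", "valence": -0.7, "arousal": 0.8},
    {"file": "birds.wav", "valence": 0.1, "arousal": 0.2},
]

target_valence = interpolate(valence_curve, 5.0)
target_arousal = interpolate(arousal_curve, 5.0)
choice = nearest_segment(toy_segments, target_valence, target_arousal)
print(target_valence, target_arousal, choice["file"])
```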


The mixing engine generates a soundscape from a text-based utterance and curves for the movement of valence and arousal over the duration of the soundscape. The engine has access to a database of audio segments indexed by semantic descriptors, valence and arousal values, and background or foreground classification. The engine creates background and foreground tracks for each of the soundscape concepts and assigns sound recording segments to those tracks.
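The track layout described above can be sketched as a simple grouping step. This is an illustrative assumption about the data shape, not the engine's code: each segment is assumed to carry a `concept` and a `label` field, and `build_tracks` is a hypothetical helper that only handles assignment, not scheduling or mixing.

```python
from typing import Dict, List, Tuple

TrackKey = Tuple[str, str]  # (concept, "background" or "foreground")


def build_tracks(concepts: List[str],
                 segments: List[Dict[str, str]]) -> Dict[TrackKey, List[str]]:
    """Create a background and a foreground track per concept and fill them."""
    tracks: Dict[TrackKey, List[str]] = {}
    for concept in concepts:
        for layer in ("background", "foreground"):
            tracks[(concept, layer)] = [
                segment["file"]
                for segment in segments
                if segment["concept"] == concept and segment["label"] == layer
            ]
    return tracks


# Hypothetical database rows for two soundscape concepts.
toy_rows = [
    {"file": "rain_bed.wav", "concept": "rain", "label": "background"},
    {"file": "rain_drip.wav", "concept": "rain", "label": "foreground"},
    {"file": "dog_bark.wav", "concept": "dog", "label": "foreground"},
]

tracks = build_tracks(["rain", "dog"], toy_rows)
for key, files in tracks.items():
    print(key, files)
```

A track can end up empty, as the background track for `dog` does here, when the database holds no matching segment for that concept and layer.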
